During the recent fourth-quarter earnings call, Elon Musk all but stated that Tesla holds a notable lead in the self-driving field. While responding to Loup Ventures analyst Gene Munster, who inquired about Morgan Stanley’s estimated $175 billion valuation for Waymo and its self-driving tech, Musk noted that Tesla actually has an advantage over other companies involved in the development of autonomous technologies, particularly when it comes to real-world miles.

“If you add everyone else up combined, they’re probably 5% — I’m being generous — of the miles that Tesla has. And this difference is increasing. A year from now, we’ll probably go — certainly from 18 months from now, we’ll probably have 1 million vehicles on the road with — that are — and every time the customers drive the car, they’re training the systems to be better. I’m just not sure how anyone competes with that,” Musk said.

To carry its self-driving systems towards full autonomy, Tesla has been developing its own custom hardware. Designed by Apple alumnus Pete Bannon, Tesla’s Hardware 3 upgrade is expected to provide the company’s vehicles with a 1000% improvement in processing capability compared to current hardware. Tesla has released only a few hints about HW3’s capabilities over the past months. That said, a patent application from the electric car maker has recently been published by the US Patent Office, hinting at an “Accelerated Mathematical Engine” that would most likely be utilized for Tesla’s Hardware 3.

In the patent’s description, Tesla notes that there is a need to develop “high-computational-throughput systems and methods that can perform matrix mathematical operations quickly and efficiently,” considering that current systems have notable limitations. These limitations become evident in computationally demanding applications, as described by Tesla in the following section.

“Computationally demanding applications, such as a convolution, oftentimes require a software function be embedded in computation unit 102 and used to convert convolution operations into alternate matrix-multiply operations. This is accomplished by rearranging and reformatting data into two matrices that then can be raw matrix-multiplied. However, there exists no mechanism to efficiently share or reuse data in scalar machine 100, such that data necessary to execute each scalar operation has to be re-stored and re-fetched from registers many times. The complexity and managerial overhead of these operations becomes significantly greater as the amount of image data subject to convolution operations increases.”
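To make the software approach described in the excerpt more concrete, the conversion of a convolution into a matrix multiply can be sketched in a few lines of Python. This is only an illustration of the general technique (commonly called im2col), not code from the patent; the function names `im2col` and `conv2d_as_matmul` are hypothetical, and the "convolution" here is the unflipped sliding-window product typically used in neural networks.

```python
def im2col(image, k):
    """Unroll every k x k patch of a 2-D image into one row of a matrix.

    Overlapping patches share pixels, so the same value is copied into
    several rows. This duplication is the re-fetching overhead that the
    excerpt attributes to software implementations.
    """
    h, w = len(image), len(image[0])
    rows = []
    for i in range(h - k + 1):
        for j in range(w - k + 1):
            rows.append([image[i + di][j + dj]
                         for di in range(k) for dj in range(k)])
    return rows


def conv2d_as_matmul(image, kernel):
    """Compute a 'valid' sliding-window product as patches x flat kernel."""
    k = len(kernel)
    patches = im2col(image, k)                       # (num_patches, k*k)
    flat_kernel = [v for row in kernel for v in row]  # (k*k,)
    flat_out = [sum(p * w for p, w in zip(patch, flat_kernel))
                for patch in patches]
    out_w = len(image[0]) - k + 1
    # Fold the flat result back into a 2-D output map.
    return [flat_out[r:r + out_w] for r in range(0, len(flat_out), out_w)]


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
kernel = [[1, 0],
          [0, -1]]
result = conv2d_as_matmul(image, kernel)
```

Even in this tiny case, a 3x3 image with 9 pixels is expanded into 4 patches of 4 values each, or 16 stored values, and the gap widens as images and kernels grow.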

To address these limitations, Tesla’s patent application hints at the use of a custom matrix processor architecture. Tesla outlines its matrix processor architecture in the following section.

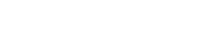

“FIG. 2 illustrates an exemplary matrix processor architecture for performing arithmetic operations according to various embodiments of the present disclosure. System 200 comprises logic circuit 232 234, cache/buffer 224, data formatter 210, weight formatter 212, data input matrix 206, weight input matrix 208, matrix processor 240, output array 226, post processing units 228, and control logic 250. Matrix processor 240 comprises a plurality of sub-circuits 242 which contain Arithmetic Logic Units (ALUs), registers and, in some embodiments, encoders (such as booth encoders). Logic circuit 232 may be a circuit that represents N input operators and data registers. Logic circuit 234 may be circuitry that inputs M weight operands into matrix processor 240. Logic circuit 232 may be circuitry that input image data operands into matrix processor 240. Weight input matrix 208 and data input matrix 206 may be stored in various types of memory including SRAM devices. One skilled in the art will recognize that various types of operands may be input into the matrix processor 240.”

By utilizing the system outlined in its recently published patent application, Tesla notes that its hardware would be able to support larger amounts of data. Such a setup also allows the system to be more efficient.

“Unlike common software implementations of formatting functions that are performed by a CPU or GPU to convert a convolution operation into a matrix-multiply by rearranging data to an alternate format that is suitable for a fast matrix multiplication, various hardware implementations of the present disclosure re-format data on the fly and make it available for execution, e.g., 96 pieces of data every cycle, in effect, allowing a very large number of elements of a matrix to be processed in parallel, thus efficiently mapping data to a matrix operation. In embodiments, for 2N fetched input data 2N² compute data may be obtained in a single clock cycle. This architecture results in a meaningful improvement in processing speeds by effectively reducing the number of read or fetch operations employed in a typical processor architecture as well as providing a paralleled, efficient and synchronized process in performing a large number of mathematical operations across a plurality of data inputs.”
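The arithmetic behind that ratio can be sketched as follows. The snippet assumes, as an interpretation rather than something the article confirms, that the figure refers to an N x N grid of multiply-accumulate cells fed by N data operands and N weight operands, with each cell counted as two operations (one multiply, one add). The function name `mac_grid_step` is hypothetical, and the loop only models the count of operations, not the parallel hardware itself.

```python
def mac_grid_step(data, weights, acc):
    """Model one cycle of an N x N multiply-accumulate grid.

    Each of the N data operands meets each of the N weight operands,
    so 2N fetched values drive N*N multiplies and N*N additions.
    Returns the number of arithmetic operations performed.
    """
    n = len(data)
    ops = 0
    for i in range(n):
        for j in range(n):
            acc[i][j] += data[i] * weights[j]  # one multiply + one add
            ops += 2
    return ops


n = 3
data = [1, 2, 3]
weights = [4, 5, 6]
acc = [[0] * n for _ in range(n)]

fetched = len(data) + len(weights)       # 2N operands read
ops = mac_grid_step(data, weights, acc)  # 2N^2 operations performed
```

With N = 3, six fetched operands produce 18 operations. If the "96 pieces of data every cycle" in the excerpt were split as 96 data operands and 96 weights (again an assumption), the same arithmetic would give 2 x 96 x 96 = 18,432 operations per cycle, which is why reusing each operand across a whole row or column matters far more at scale.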

“In operation according to certain embodiments, system 200 accelerates convolution operations by reducing redundant operations within the systems and implementing hardware specific logic to perform certain mathematical operations across a large set of data and weights. This acceleration is a direct result of methods (and corresponding hardware components) that retrieve and input image data and weights to the matrix processor 240 as well as timing mathematical operations within the matrix processor 240 on a large scale.”

Tesla did not provide concrete updates on the development and release of Hardware 3 to the company’s fleet of vehicles during the fourth-quarter earnings call. That said, Musk stated that FSD would likely be ready towards the end of 2019, though it would be up to regulators to approve the autonomous functions by then.

Back in October, Musk noted that Hardware 3 would be installed in all new production cars in around 6 months, which translates to a rollout date of around April 2019. Musk stated that transitioning to the new hardware will not involve any changes to vehicle production, as the upgrade is simply a replacement of the Autopilot computer installed on all electric cars today. In a later tweet, Musk mentioned that Tesla owners who bought Full Self-Driving would receive the Hardware 3 upgrade free of charge. Owners who have not ordered Full Self-Driving, on the other hand, would likely pay around $5,000 for the FSD suite and the new hardware.

Tesla’s patent application for its Accelerated Mathematical Engine can be accessed here.